Annoying and Cliched: Why ChatGPT Only Creates Titles With Colons

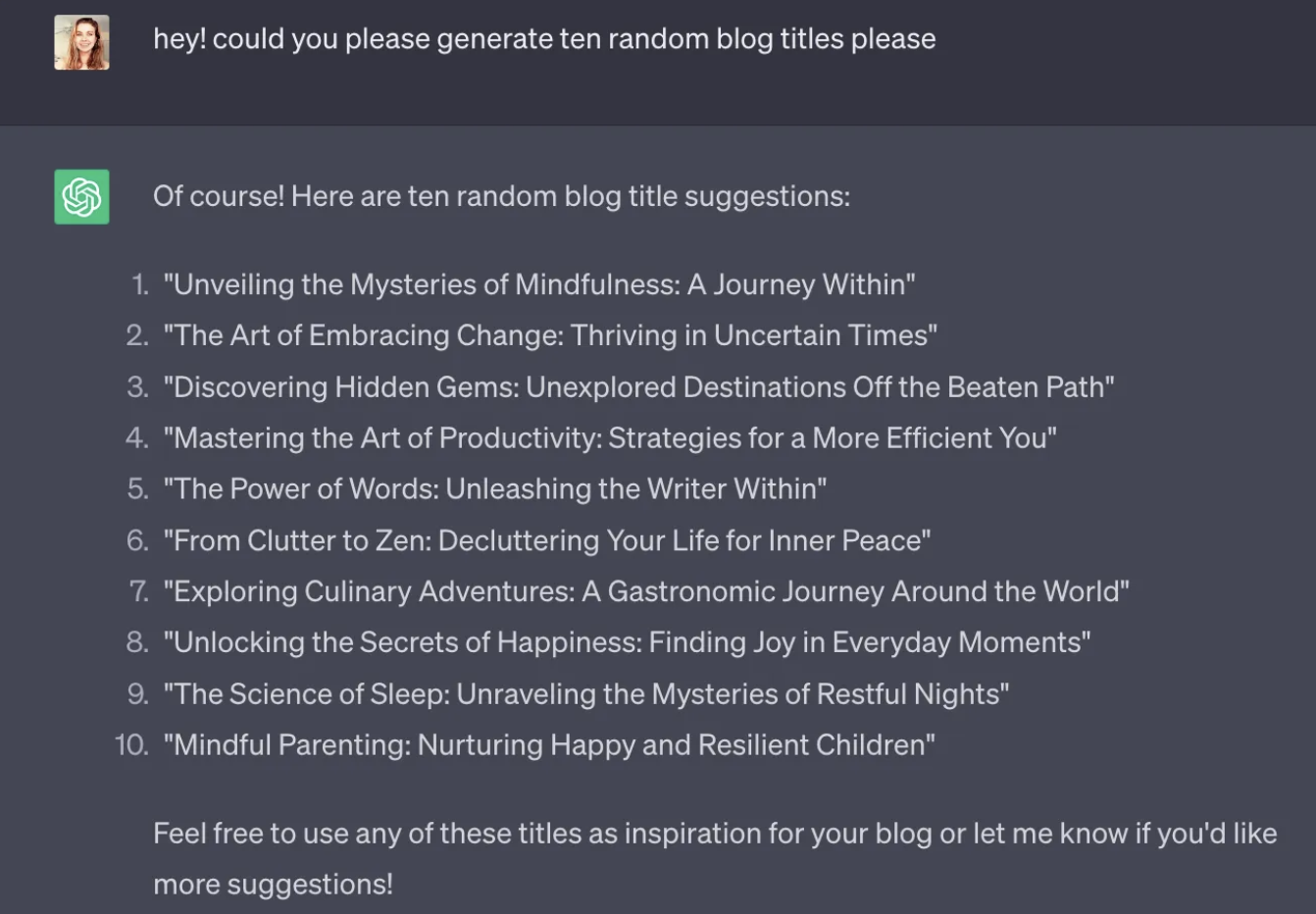

I don’t mind the odd headline with a colon. They can be useful to cut words and effectively communicate. But I do mind it when I ask ChatGPT to generate a random selection of ten blog post titles and it exclusively gives me titles with colons in them.

Screenshots from author

I get so annoyed with ChatGPT when it does this! This trend was so persistent, and my somewhat irrational hatred of it so strong, that I dedicated half a working day to discovering why on earth ChatGPT is so enamored with bisected titles, even when explicitly asked not to.

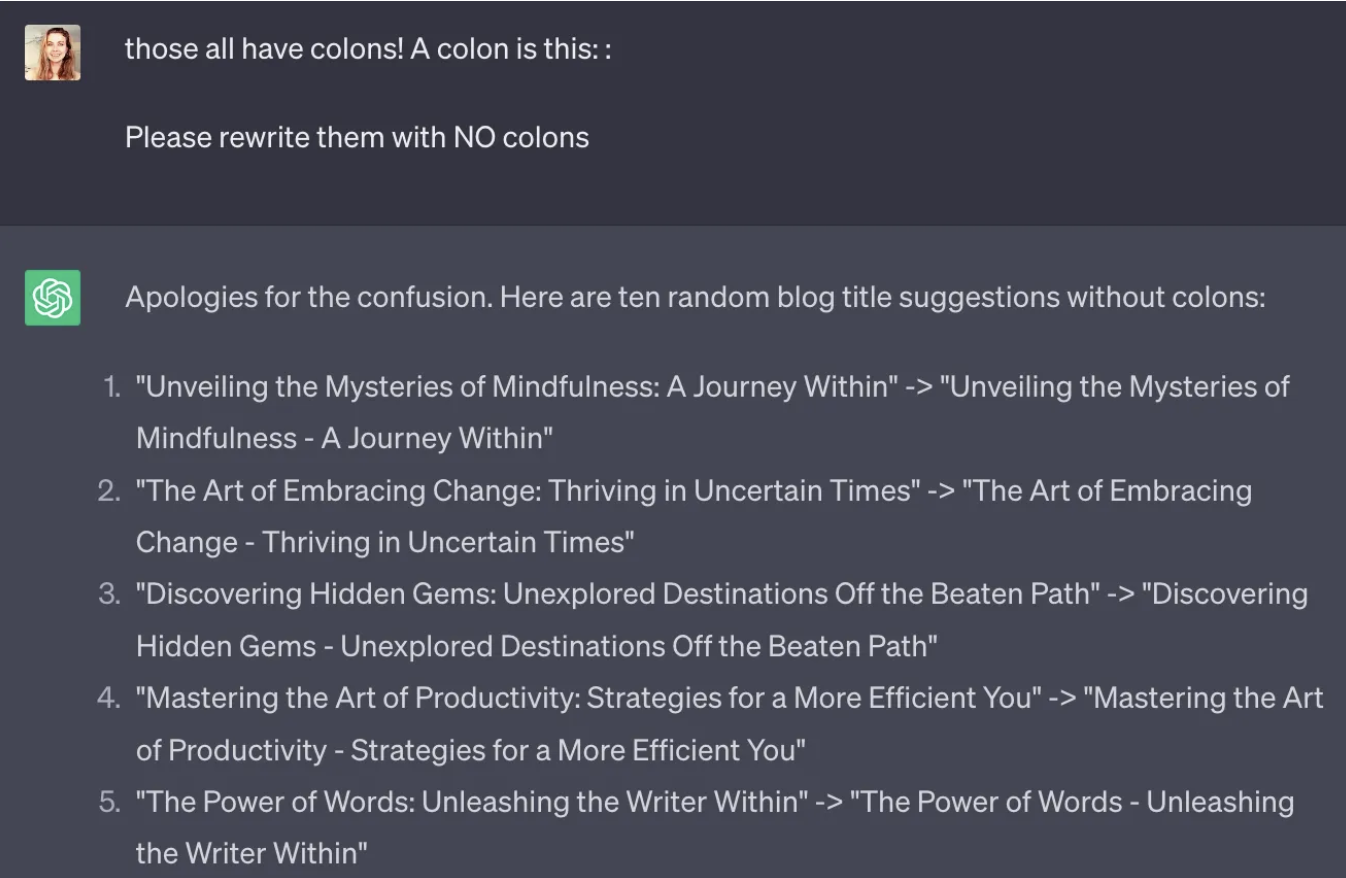

Screenshots from author

Here’s what I found.

The Prevalence of Colons: Only in ChatGPT

I assumed that ChatGPT would be one among many colon-loving AI text generators. So I was surprised when I tested out two others to see if they, too, would generate colon-heavy titles when given the same prompt.

Only 30% of Perplexity AI’s titles had colons in them.

Google’s Bard can’t count to ten, but out of the 24 titles it generated, only three, all product comparisons, contained colons.

Google’s Bard can’t count, but it can write colon-free titles.
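The percentages above come from a simple tally: count the titles that contain a colon and divide by the total. Here is a minimal Python sketch of that tally. The function name `colon_share` and the sample titles are invented for illustration; they are not the actual outputs from any of the tools.

```python
def colon_share(titles):
    """Return the fraction of titles that contain at least one colon."""
    if not titles:
        return 0.0
    with_colon = sum(1 for title in titles if ":" in title)
    return with_colon / len(titles)


# Hypothetical titles, invented for this example.
sample_titles = [
    "Tool A vs. Tool B: Which One Wins?",
    "How I Learned to Bake Bread",
    "Ten Lessons From a Year of Freelancing",
    "Why Your Houseplants Keep Dying",
]

print(f"{colon_share(sample_titles):.0%} of sample titles contain a colon")

# Bard's result from the text: 3 of 24 titles had colons.
print(f"Bard: {3 / 24:.1%}")
```

For Bard’s 3 out of 24, that works out to 12.5%, well under half of Perplexity’s 30%.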

The training data

I wrote the introduction to this article as though ChatGPT were an intelligent entity with preferences and styles. It’s not. It’s an amalgamation of training data, a smarter version of your smartphone’s predictive text.

To explain the differences between the models’ outputs, it’s worth knowing that ChatGPT was trained primarily on Common Crawl data, which is a huge corpus of raw web page data, metadata extracts, and text extracts. It’s publicly available for anyone to dig into.

Bard was trained on “1.56 trillion words of public dialog data and web text,” though Google hasn’t given any more details beyond that. Meanwhile, I imagine Perplexity AI’s models must have been tweaked manually to generate titles with fewer colons, since they were trained on a body of text very similar to ChatGPT’s.

To find out why ChatGPT “prefers” titles with colons, I dug into its training data.

Still obsessed with the two-part title, just subbing in a — instead of a :. Screenshot via author.

Colons In ChatGPT: Why So Many?

Ultimately, my question is more about online writing conventions. ChatGPT doesn’t “love” colons; it spits out what it’s eaten. The question is, more appropriately, why do we feed it so much colon-heavy content? It boils down to two things:

SEO

Academic writing

SEO

Many of my product reviews featured colons, like Bard’s original suggestions. Confronted with the ugly truth, I reflected on why I’d put colons in titles when normally I dislike doing so. It almost always boiled down to SEO.

It’s hard to jam a keyword like “apps like ChatGPT” naturally into a title; a colon offers a natural division. “Ko-fi review” is a little bland as a title; a colon allows me to add some context and pizzazz. Here are three examples, SEO keywords bolded, to illustrate what I mean.

AI Chat Alternatives: Top 12 Apps Like ChatGPT

Ko-fi Review: Best Platform to Monetize Your Audience?

Overemployment: How Remote Workers Take on 2+ Jobs (and 2+ Salaries)

I found that the author of the Online Journal Blog thought the same thing. They wrote about it in a blog post: “One of the ‘rules’ of SEO is to make sure you get key words in your headline. A second rule is to try to get those words at the front of your headline. The colon allows you to do both.”

It makes sense that ChatGPT, trained on a corpus of SEO-friendly blog posts and news stories scraped from the internet, would default to the same sort of style.
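To make the “colon as keyword divider” idea concrete, here is a small Python sketch that splits each of my three example titles at the colon and reports which side the keyword lands on. The helper `keyword_position` is a name I made up for this illustration. The first two keywords come from the paragraph above; the third (“overemployment”) is my assumption for the sake of the example.

```python
def keyword_position(title, keyword):
    """Report whether the keyword sits before or after the first colon."""
    head, sep, tail = title.partition(":")
    if not sep:
        return "no colon"
    if keyword.lower() in head.lower():
        return "before colon"
    if keyword.lower() in tail.lower():
        return "after colon"
    return "keyword missing"


examples = [
    ("AI Chat Alternatives: Top 12 Apps Like ChatGPT", "apps like ChatGPT"),
    ("Ko-fi Review: Best Platform to Monetize Your Audience?", "Ko-fi review"),
    # Assumed keyword: the text does not name one for this title.
    ("Overemployment: How Remote Workers Take on 2+ Jobs (and 2+ Salaries)",
     "overemployment"),
]

for title, keyword in examples:
    print(f"{keyword!r}: {keyword_position(title, keyword)}")
```

In each case the keyword fills one whole side of the colon, which leaves the other side free for context.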

Academic writing

It turns out that colons are hyperpresent in academic formats, too. I loved Kevin McElwee’s analysis of Princeton theses, highlighting how titles with colons have grown in popularity over time.

Colons: why are they so popular? (Source: McElwee’s excellent article on the subject.) Give it a read; it’s engaging and educational.

Going deeper into the academic literature, it turns out that back in the 80s, some folks associated colons in titles with scholarship. Like, literally. The Dillon Hypothesis of Titular Colonicity, apparently a real thing, states that:

“The presence of a colon in the title of a paper is the primary correlate of scholarship.”

Even further, I discovered a paper about the Dillon Hypothesis of Colonicity, published in 1985, wherein the author claimed that “[t]itular colonicity has increased dramatically over the last 15 years.”

Again, think of what ChatGPT has consumed: books, articles, websites, and other texts from the internet. Colons there mean we’ll find colons in ChatGPT.
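The “colons in, colons out” idea can be shown with a deliberately crude toy in Python. This is not how a large language model works internally. It only shows that if you draw titles at random from a colon-heavy pile, most of what you draw will have colons. The corpus, the 8-in-10 ratio, and the function name `sample_colon_share` are all made up for this sketch.

```python
import random


def sample_colon_share(corpus, n_samples, seed=0):
    """Draw titles uniformly from the corpus and return the share with colons."""
    rng = random.Random(seed)
    drawn = [rng.choice(corpus) for _ in range(n_samples)]
    return sum(":" in title for title in drawn) / n_samples


# Hypothetical corpus: 8 of 10 titles use a colon.
toy_corpus = [f"Topic {i}: A Subtitle" for i in range(8)] + [
    "A Plain Title",
    "Another Plain Title",
]

print(sample_colon_share(toy_corpus, 1000))
```

With a corpus that is 80% colon titles, the sampled share hovers around 0.8. The output mirrors the input.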

I also spoke to an AI expert — Tony Guo, the co-founder of a tool called AI Scout. He suggested my results came down to the initial prompt, too. “The prompt you mentioned in the article ‘hey! could you please generate ten random blog titles please’ has somewhat of a casual tone, and GPT may be responding in like due to the way it searches the vector space,” he said (bolding mine).

He also had an answer for why Perplexity may have been better: “Furthermore, trying ‘please generate ten random blog titles. Do not use colons’ with GPT-4 returns much better results. GPT-4 has better ‘steerability,’ i.e. the ability for it to follow instructions from the user. This ties back into why other GPT-based tools like Perplexity may return different answers- it ultimately depends on how the engineers have implemented the models.”

ChatGPT: A Mirror, Not a Mind

Ultimately, my takeaway is this: it’s my fault for getting annoyed with ChatGPT. I joked about it earlier, but I also fall into the trap of thinking of ChatGPT as a particularly devious little imp who lives in a box and enjoys foiling my plans, rather than what it is: a large language model that outputs text that sounds similar to what a human would write.

Many, many people in recent media describe ChatGPT in language that makes it sound human, or at least conscious:

“ChatDM immediately showed talent for verbosity,” write Ethan Gilsdorf and Ezra Haber Glenn in Wired, describing their attempts to D&Dify ChatGPT. Their language is very similar to what my 3rd-grade teacher wrote in my end-of-year report card.

“ChatGPT recently proved that it can pass the bar exam,” writes a nameless Decrypt author. For comparison, would you ever say anything like, “My hammer recently proved that it can construct a tree house”?

“[ChatGPT] didn’t disappoint and generated a list of three areas it determined it could help,” writes Mike Starr for VentureBeat, again describing it as something capable of “determining” things.

ChatGPT is not trying specifically to annoy me when it insists on using colons, even when I say, “Don’t use colons.” The prevalence of colon-heavy titles in ChatGPT-generated text suggests only that it was trained on a body of text that contained colons in titles. When I ask ChatGPT to write me ten blog post titles with no colons and it immediately drafts ten blog post titles with colons, and I get annoyed? That just illustrates how much my perception of “artificial intelligence” has departed from reality.

To me, this little experiment proves it. ChatGPT is not intelligent. It’s just a mirror of its training data. Creators would do well to remember that when using it.